A whole lot has changed in Unreal in the last few years. I’ve found it interesting to look back over all the things I wanted in the software, and at how rapidly it has been advancing.

My obsession with wanting real-time edge-weighted subdivision surfaces in the engine, for example, has backed off substantially thanks to Nanite!

Nanite does an incredible job of compressing assets, especially if you use Trim Relative Error even a little.

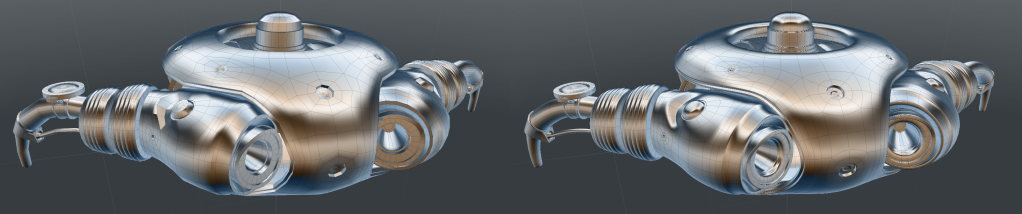

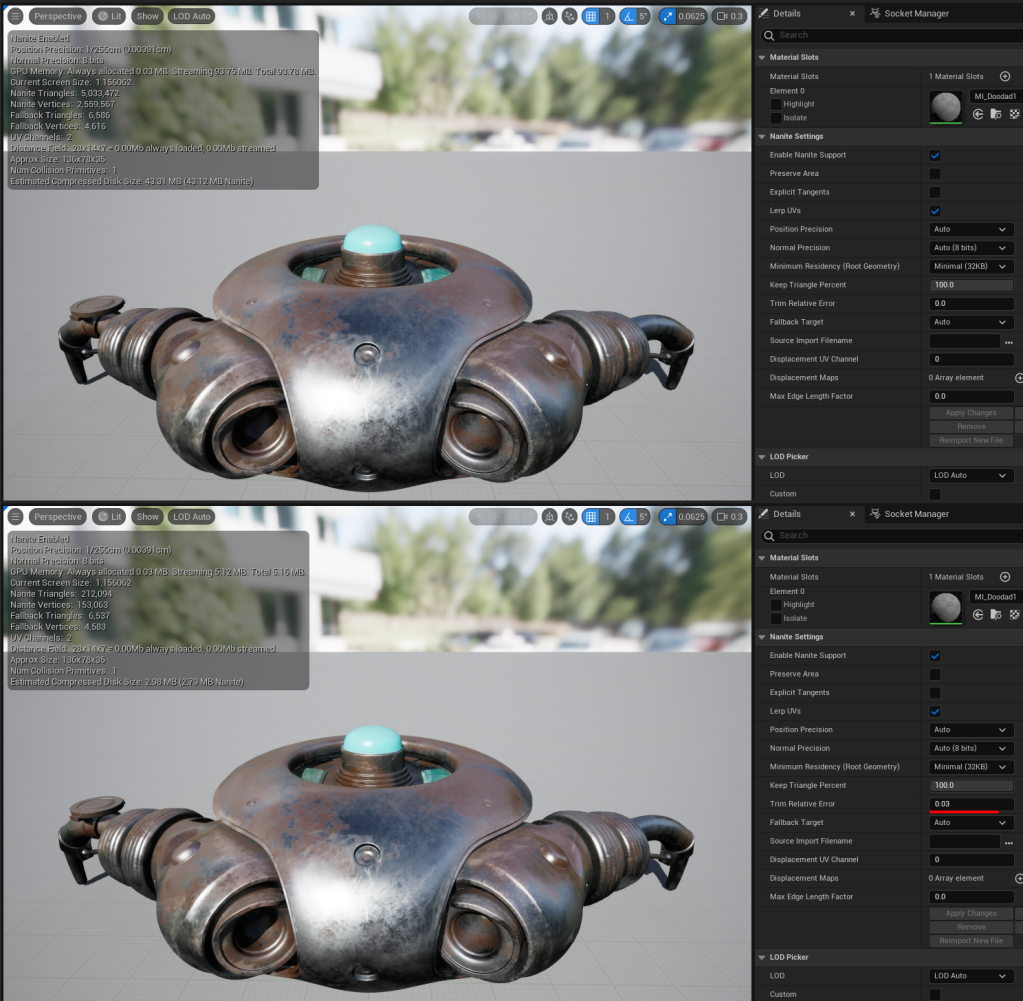

For example, this little robot doodad thingo is about 560 KB as edge-weighted sub-d in Modo:

Subdivide that and import it into Unreal as Nanite, and it is about 90 MB, but Trim Relative Error takes it down to 5 MB, with minimal visual difference:

That mesh is still 10x as big as the sub-d Modo source file.

But whatever you ended up doing to get sub-d working in realtime, you’d be paying some non-zero memory cost for that subdivided asset, unless you are straight up raytracing the limit surface and not generating the triangles at all. And even *if* that is a feasible thing, I have no idea what other costs you’d have…

So yes fine, Nanite has incredible compression, and might get me over my realtime sub-d hangups 😛

Nanite Tessellation rocks!

In 5.4 we get Nanite Tessellation!

It was demo’d very well at the GDC State of Unreal with the new Marvel 1943: Rise of Hydra game. The 5.4 Preview is out, so I’ve had a few days playing with it 🙂

It has also been discussed in a bunch of recent videos from Epic that I still need to get around to finding and watching, and will post in the comments at some point…

Why Tessellation? Lots of reasons, but I’ll start with one I really wanted to try out!

So imagine you want to make a 20m high rock cliff in one piece, and have enough ground level vertices for first-person camera detail, with a vertex every 5 to 10 centimetres. Not really as sensible as building something out of smaller pieces, but not out of the realms of something I’d like to do.
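To get a feel for how quickly that vertex spacing adds up, here is a rough back-of-envelope sketch. It assumes a uniform grid of vertices over a flat surface, and the 20m x 100m face is a made-up number for illustration, not the actual cliff (real rock has a lot more surface area than a flat wall):

```python
def estimate_vertex_count(surface_area_m2: float, spacing_m: float) -> int:
    """Rough vertex count for a uniform grid: one vertex per spacing x spacing cell."""
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")
    return round(surface_area_m2 / (spacing_m * spacing_m))


# Hypothetical flat cliff face, 20 m high by 100 m long (illustrative only).
face_area_m2 = 20.0 * 100.0

for spacing_m in (0.10, 0.05):
    count = estimate_vertex_count(face_area_m2, spacing_m)
    print(f"{spacing_m * 100:.0f} cm spacing: ~{count:,} vertices")
```

The main takeaway is that halving the spacing quadruples the vertex count, so chasing close-up detail across a whole large asset gets expensive fast.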

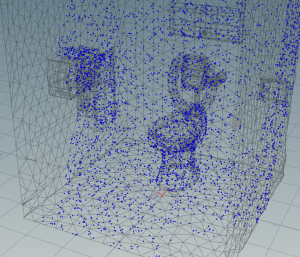

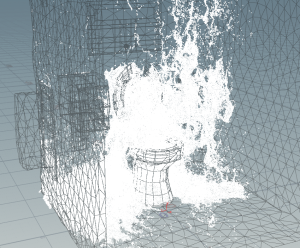

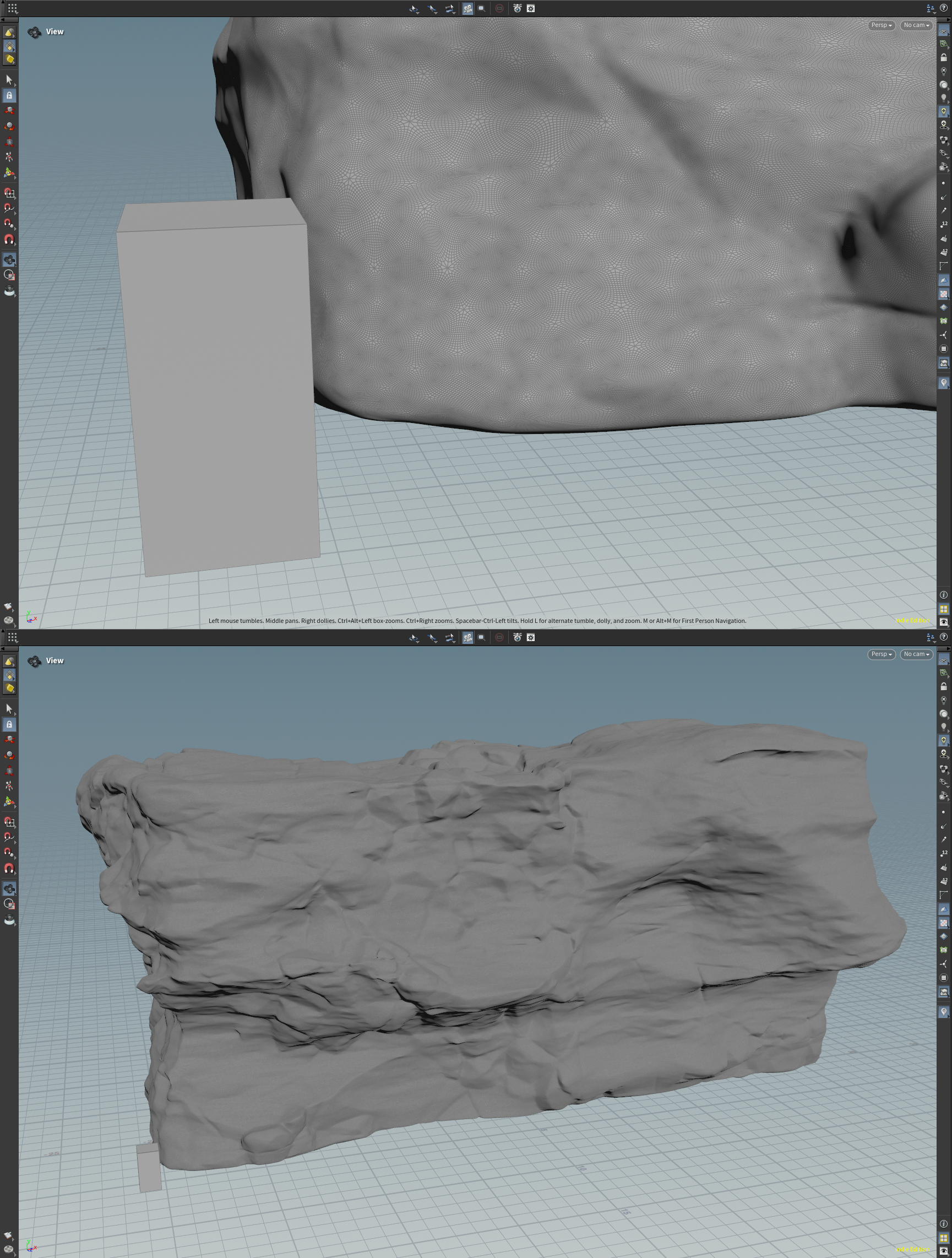

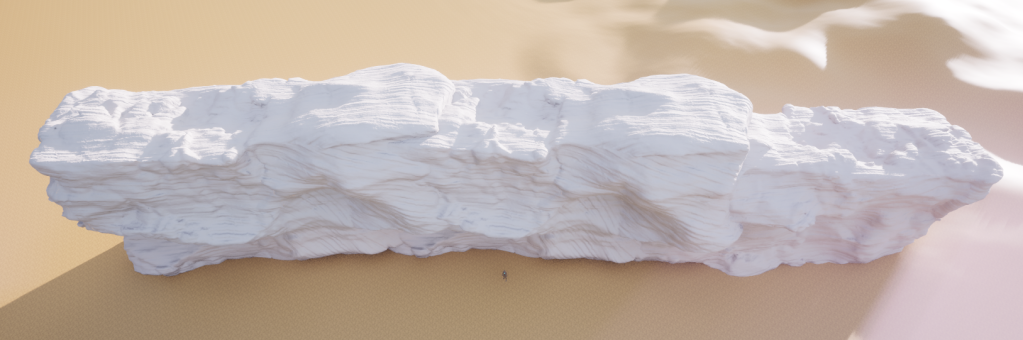

What that looks like in Houdini for my big rock (box for rough human scale):

That is a 70 million vertex mesh, and maybe on the light side of things for close up details tbh…

Can you import that as Nanite? Sure, it will take a few minutes just to save the FBX file for it, it will be 4 GB, and good luck if you want to UV / texture it…

Artists find sensible ways around this sort of thing: unwrap a lower poly mesh and project the high poly back onto it; use software like Rizom that handles high vertex counts; if you happen to know you will always be at ground level, concentrate the vertex data there and have less up top; etc, etc.

Using the Displacement on import options on Static Meshes (that I believe Epic built out for upgrading Fortnite assets to Nanite) is also an option here.

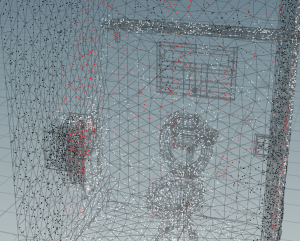

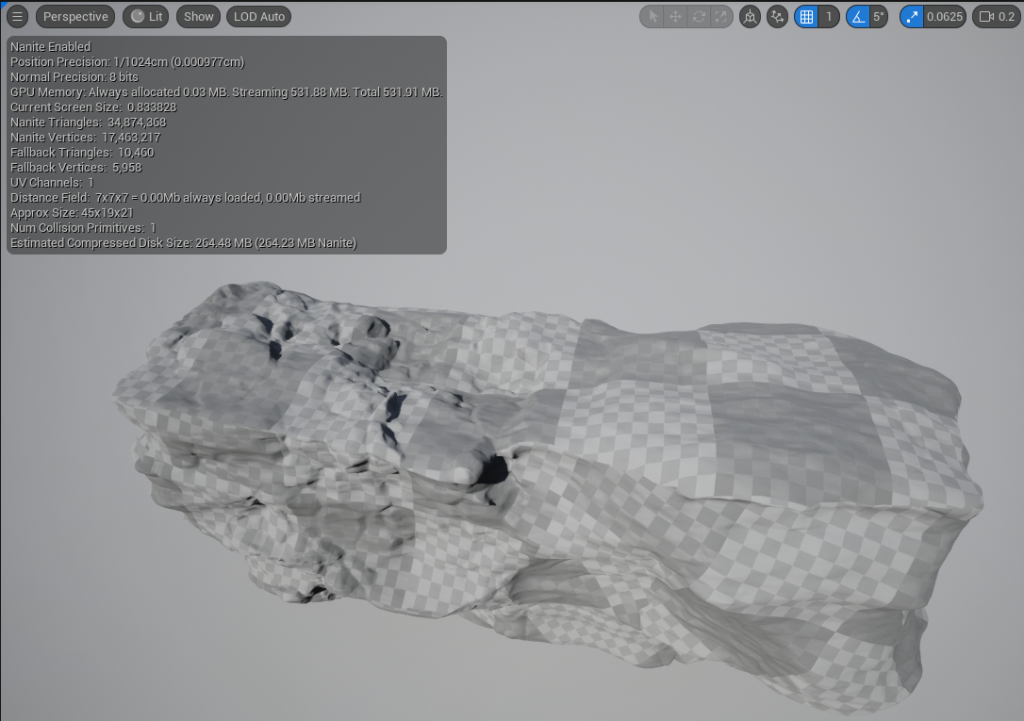

Ignoring that though, importing a mesh this size into Unreal might take 30+ minutes, and you end up with something like this:

531 MB is still very impressive for that amount of data!

And it is entirely reducible using Trim Relative Error, but given how long it takes to regenerate the mesh, you might find yourself spending hours tweaking Trim Relative Error to find the right balance, and you will still be sacrificing detail.

Lower base mesh detail with tiling displacement

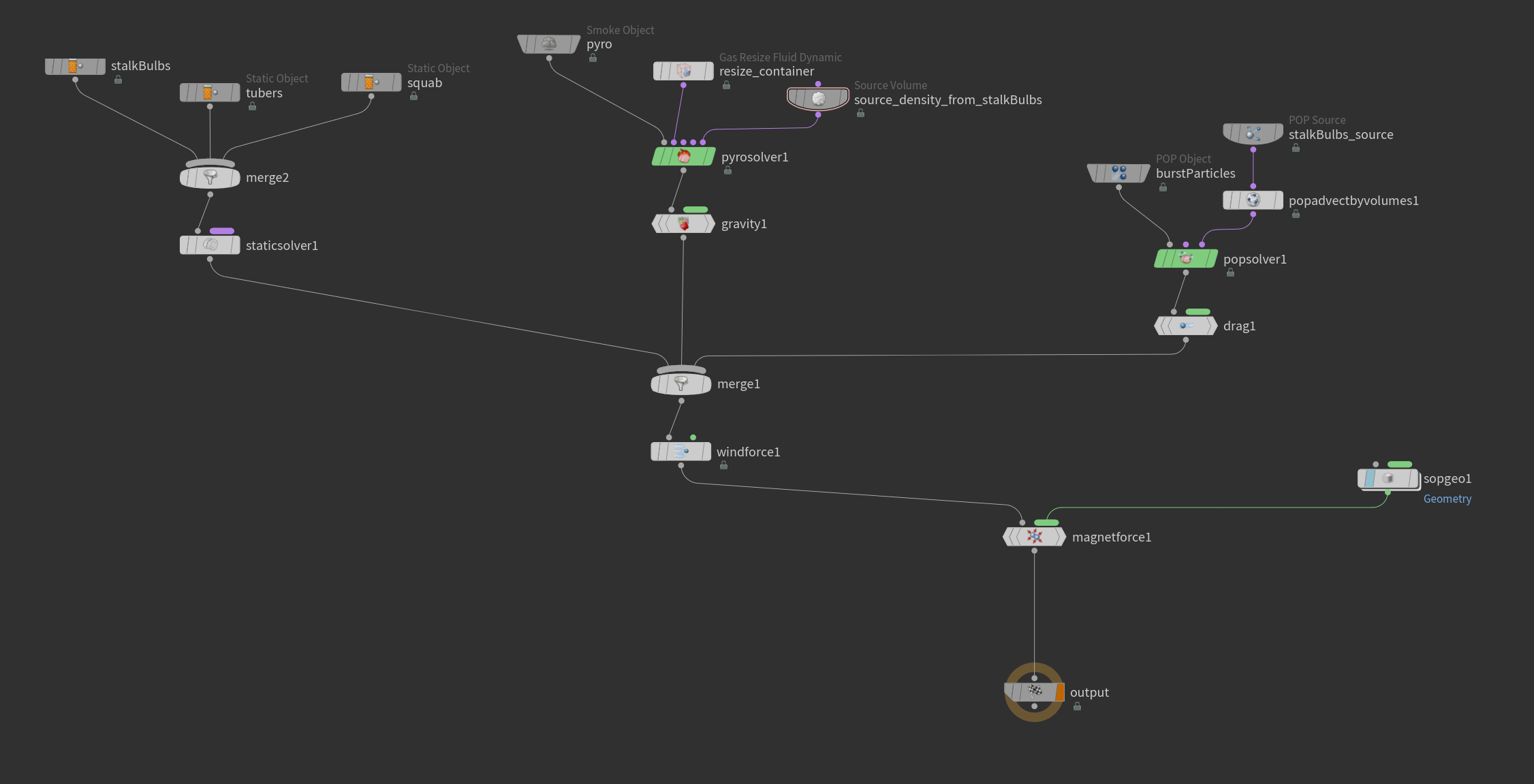

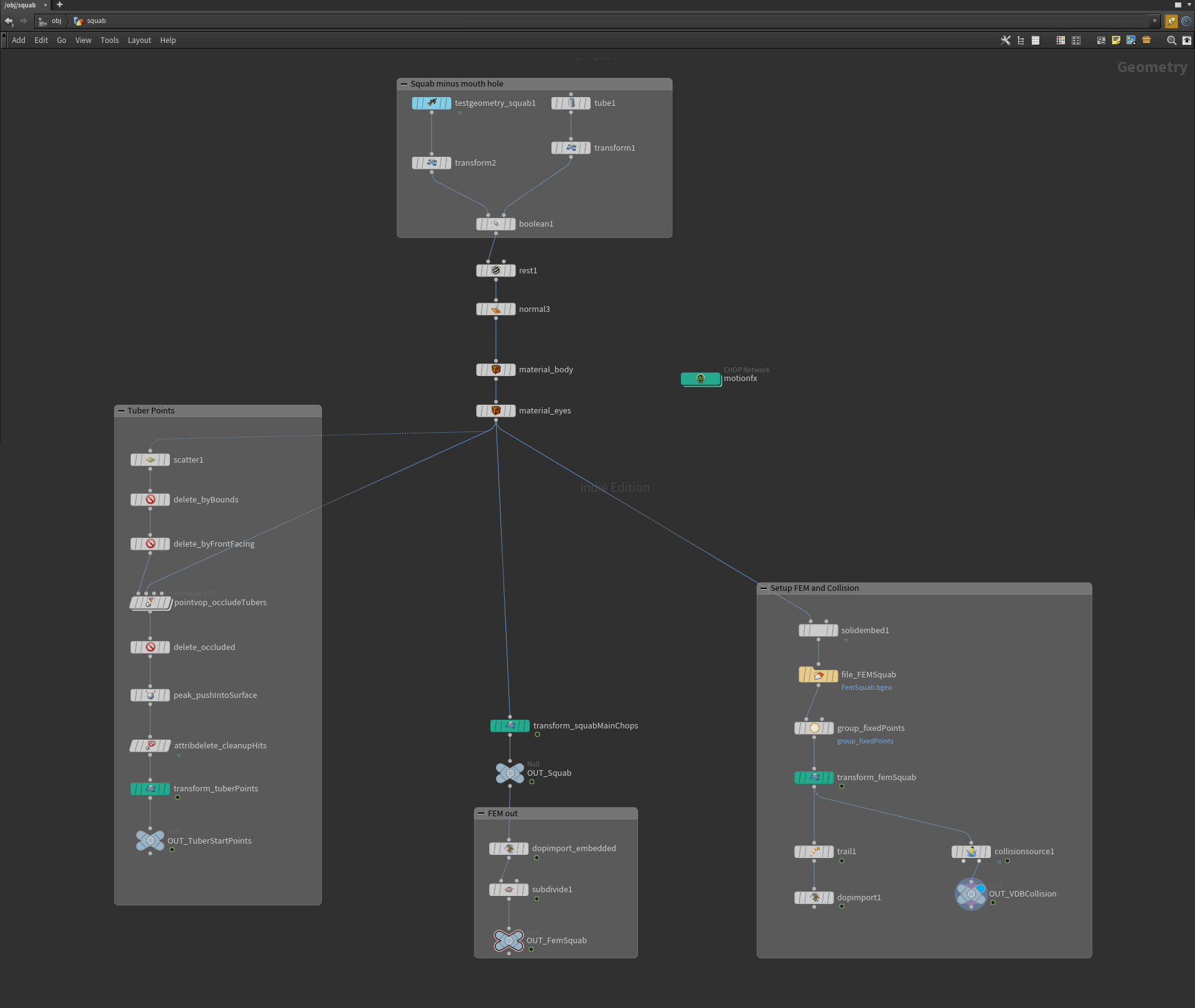

Nanite Tessellation offers another approach: lower resolution assets to work with in DCC tools like Houdini and Modo, plus the opportunity to create more generic tileable displacement that can be used across multiple assets.

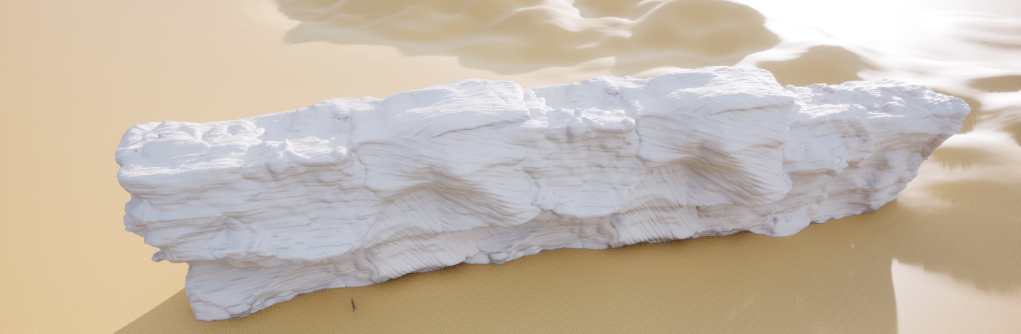

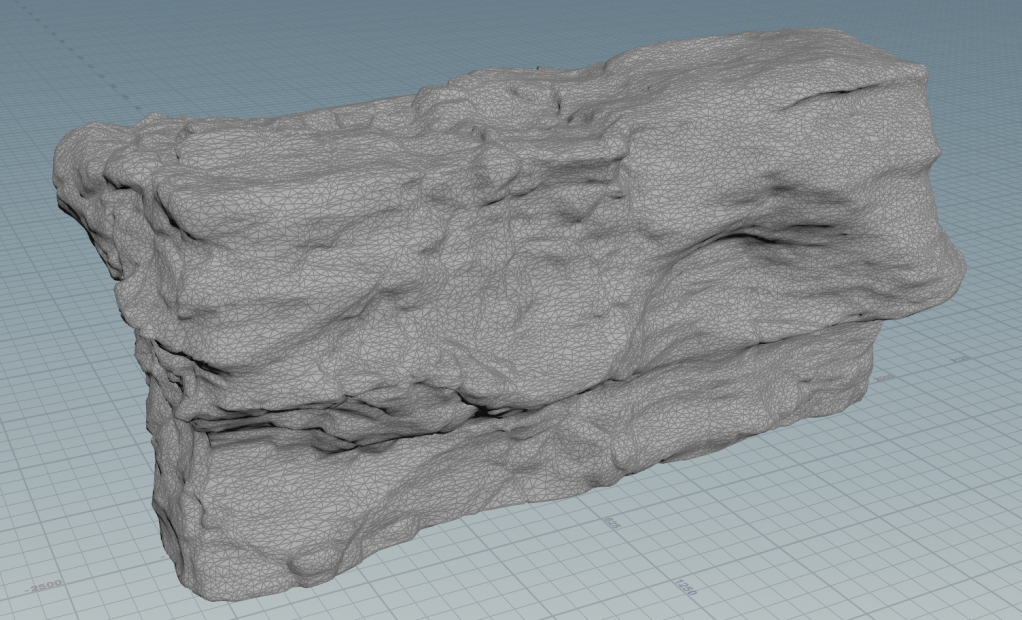

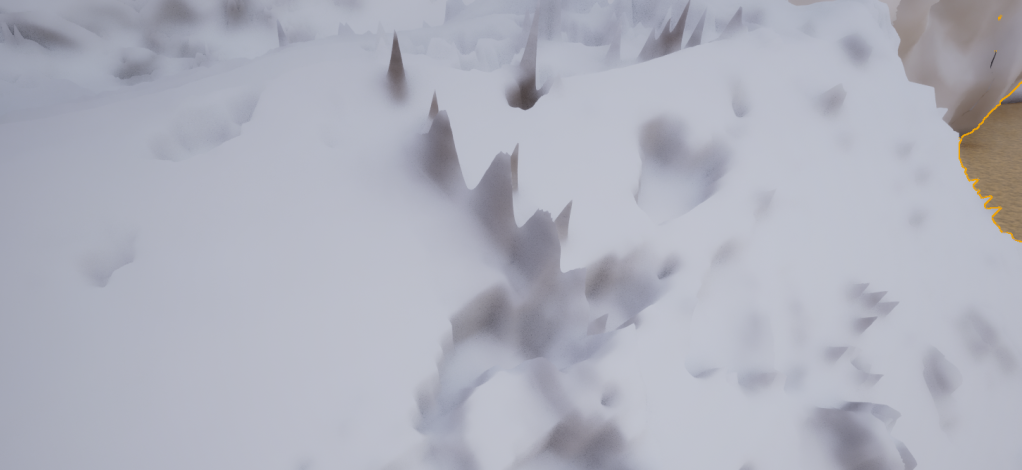

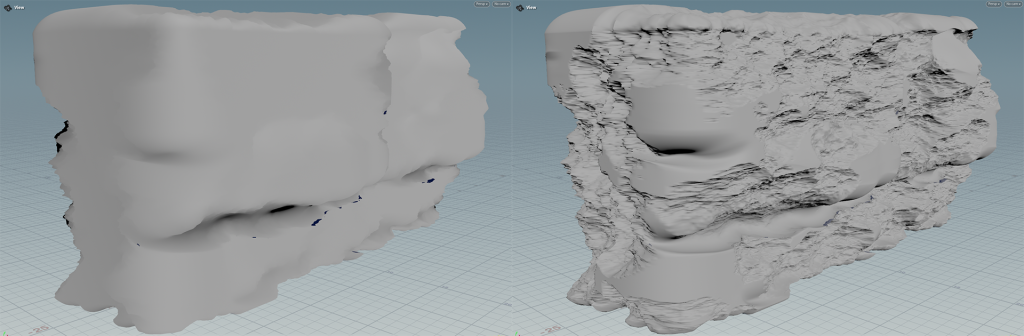

Here is a lower poly version of that cliff mesh with 2 tiling displacement maps on it. I will eventually mix in at least one more and paint them in and out in different places, but for now both are blended together at different resolutions:

From ground level, I’m pretty happy with the results. With a bit of work on the displacement maps I think it could look decent!
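As a rough illustration of the idea (not my actual material, which lives in the Unreal material graph), here is a minimal Python sketch of blending one tiling height source at two tiling rates. I'm treating "different resolutions" as different tiling rates here, and `tiling_height`, the tile counts and the blend weight are all made-up stand-ins:

```python
import math


def tiling_height(u: float, v: float) -> float:
    """Hypothetical stand-in for a tiling displacement texture.

    Repeats every 1.0 in u and v, and returns a height in the 0..1 range.
    """
    return 0.5 + 0.25 * (math.sin(2.0 * math.pi * u) + math.cos(2.0 * math.pi * v))


def blended_displacement(u: float, v: float,
                         coarse_tiles: int = 2,
                         fine_tiles: int = 16,
                         fine_weight: float = 0.3) -> float:
    """Blend the same tiling height source sampled at two tiling rates."""
    coarse = tiling_height(u * coarse_tiles, v * coarse_tiles)  # big shapes
    fine = tiling_height(u * fine_tiles, v * fine_tiles)        # small detail
    return (1.0 - fine_weight) * coarse + fine_weight * fine


print(blended_displacement(0.3, 0.7))
```

Painting maps in and out would amount to driving something like `fine_weight` from a mask instead of a constant.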

The base mesh is very reasonable (around 90k Nanite triangles when imported into Unreal)!

At the very least this can be faster iteration, but depending on how much you can re-use displacement textures, it can also be a big disk space and maybe memory saving!

I could move more details into the base mesh given how well Nanite compresses.

But while Houdini has some great UV tools, they are pretty slow on big meshes and don’t guarantee no overlaps, and I haven’t had a lot of luck fixing that with the various tools available (will try again at some point).

So keeping this low enough that I can manually mark seams works well for me personally:

A mesh this low only took me about 20 minutes to set up seams for, which is not bad at all. The subsequent unwrap was pretty clean due to the lower detail, and only took a few seconds to run.

Not a fair comparison, but that mesh ends up being less than 2 MB with a bit of tuning of Trim Relative Error (which is vastly faster to tune on a mesh of this density)!

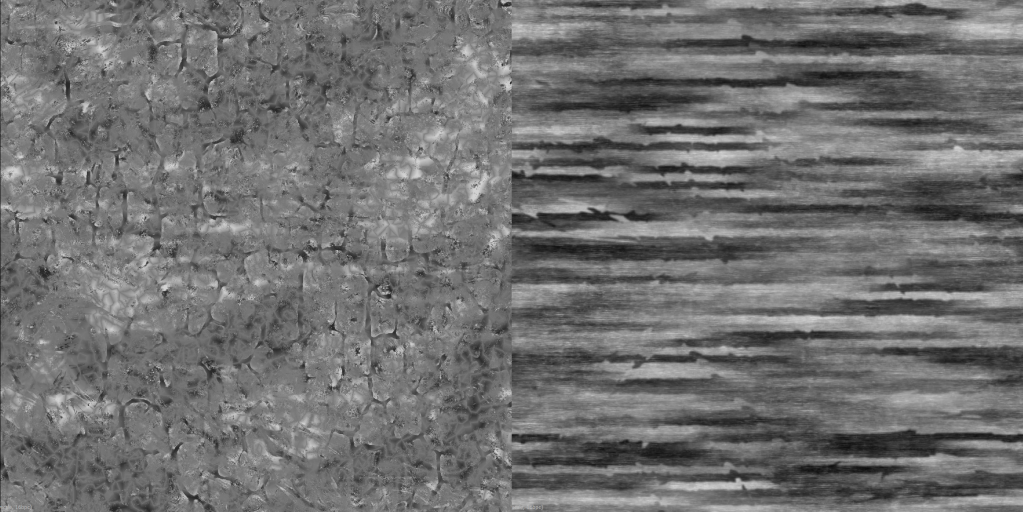

The displacement maps are pretty rough and generated out of Substance Designer using some of the base noises:

I also generated normals and AO, which for the time being is necessary. I’ll go into that in a bit.

Initially when I turned on Nanite Tessellation I wasn’t sure it was working, because it looked like this:

But then it was obvious from the silhouette that it was working, so I wasn’t sure why I wasn’t seeing better details, and it took me a little while to work it out.

The shadows also showed it was doing *something* but it didn’t look quite right:

When you get close to the mesh, Lumen traces start giving you a bit more, and you start to see some detail, so that was another clue that it was definitely working (exaggerated displacement here):

And finally when you look at world normals it is obvious why:

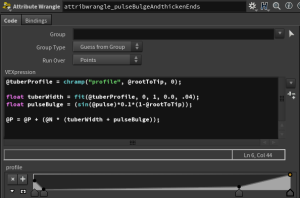

Nanite Tessellation doesn’t update normals (assuming I didn’t mess something up, which is a big assumption…)

I was a bit surprised by this at first, but probably shouldn’t have been. This is also true of displacement in Houdini if you don’t manually update normals after doing it:

I’m hoping at some point in the future there might be a feature that does *something* with normals, but in the meantime I’d recommend always pairing displacement maps with normal maps.
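If you don't have a tool handy to bake the matching normal map, the general idea of deriving one from a height map is simple enough to sketch. This is not what Unreal or Substance Designer does internally, just a minimal central-difference version in plain Python. The `strength` parameter and the wrap-around edges (for tiling maps) are my own assumptions, and the sign of the Y component depends on the normal map convention of your target:

```python
import math


def normals_from_heightmap(height, strength=1.0):
    """Tangent-space normals from a 2D height grid (list of rows).

    Uses central differences and wraps at the edges, which suits tiling maps.
    Returns a grid of unit-length (x, y, z) tuples.
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Slope of the height field in each direction.
            dx = (height[y][(x + 1) % cols] - height[y][(x - 1) % cols]) * 0.5
            dy = (height[(y + 1) % rows][x] - height[(y - 1) % rows][x]) * 0.5
            # The normal leans away from the uphill direction.
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals


flat = [[0.5] * 4 for _ in range(4)]
print(normals_from_heightmap(flat)[0][0])  # a flat map gives straight-up normals
```

To store the result as a texture you would remap each component from -1..1 into 0..1.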

The other issue you may have noticed in a few of the screenshots is meshes splitting at UV seams:

I think for this reason you’re likely to see Nanite Tessellation mostly used on ground planes, or walls, or anything that can feasibly unwrap in a single UV sheet for now.

Some better unwrapping than I’ve done would help, as might marking up seams with vertex colours in Houdini and then blending the displacement down around them with some sort of falloff. Augmenting with a bunch of smaller rock pieces would also cover them up.
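I haven't built the seam falloff yet, so treat this as a sketch of the idea rather than a tested workflow. It assumes you have some per-vertex distance to the nearest seam (`distance_to_seam` is a hypothetical attribute, for example derived from a painted vertex colour mask) and that the displacement is zero-centred, so fading it to zero at the seam means both sides end up in the same place:

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Standard smoothstep: 0 below edge0, 1 above edge1, smooth in between."""
    t = min(1.0, max(0.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def seam_faded_displacement(displacement: float,
                            distance_to_seam: float,
                            falloff_distance: float = 0.5) -> float:
    """Fade displacement to zero at a UV seam.

    Full strength returns once you are falloff_distance away from the seam.
    """
    return displacement * smoothstep(0.0, falloff_distance, distance_to_seam)


for d in (0.0, 0.25, 0.5, 1.0):
    print(d, seam_faded_displacement(0.2, d))
```

The cost is a flatter band along every seam, which is where the extra rock pieces would earn their keep.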

You may also be able to mitigate this by using projected textures more, assuming you can live with the cost and issues that come with tri-planar or whatever other approach.
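For reference, the usual tri-planar blend looks something like the sketch below. This is the generic technique, not anything specific to Unreal's material nodes, and the `sharpness` value and the `sample` callback are placeholders of my own. Since it samples by world position rather than UVs, there are no UV seams to split along, at the cost of three samples per texture:

```python
def triplanar_weights(normal, sharpness: float = 4.0):
    """Blend weights for the X, Y and Z projections from a surface normal.

    Higher sharpness gives tighter transitions between projections.
    """
    ax, ay, az = (abs(c) ** sharpness for c in normal)
    total = ax + ay + az
    if total == 0.0:
        raise ValueError("normal must be non-zero")
    return (ax / total, ay / total, az / total)


def triplanar_sample(sample, position, normal, sharpness: float = 4.0) -> float:
    """Blend three planar projections of a 2D sample(u, v) function."""
    x, y, z = position
    wx, wy, wz = triplanar_weights(normal, sharpness)
    # Each axis projects the texture along itself, using the other two as UVs.
    return wx * sample(y, z) + wy * sample(x, z) + wz * sample(x, y)


print(triplanar_weights((0.0, 0.0, 1.0)))
```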

Thoughts

Super impressed with Nanite Tessellation!

I probably should have spent more time with it before this post, but I was too excited to try it out and share my thoughts. Please comment if I got anything horribly wrong or if you have your own thoughts on some of my assumptions / comments!

I think it’s going to be a really big deal for a lot of teams, and potentially a massive production cost saving. Building a library of great tiling detail displacement maps could really speed up iteration for certain types of assets.

On top of that, I haven’t even started looking at options for animating them!

There are likely all sorts of very fun things you can do with animated displacement maps in Nanite. Can’t wait to see what clever artists do with it 🙂